Exporting Kubernetes Logs to Linode S3 using FluentBit

If you need to archive logs but don't need the centralised querying or indexing that a more sophisticated solution such as Grafana Loki provides, FluentBit can export them to one or more destinations.

Overview

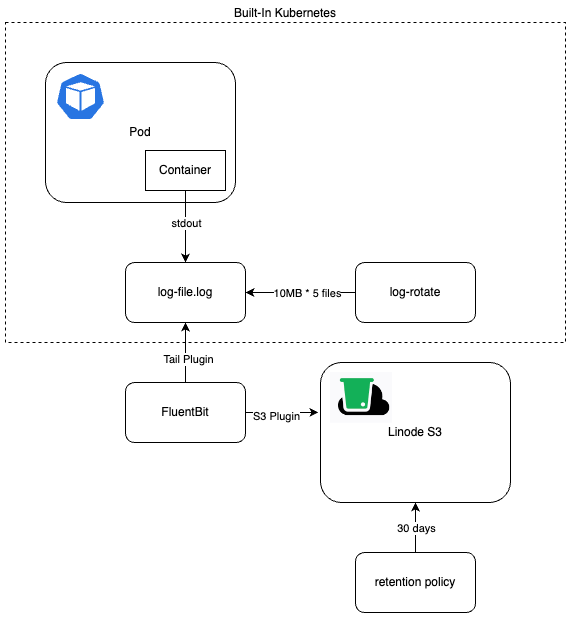

The architecture is shown above. By default, Kubernetes rotates logs based on file size. Our aim is to export these logs to S3 for archive, with a 30-day retention policy set on the bucket.

Kubernetes Logging

Kubernetes' default behaviour is described in the official Kubernetes logging documentation. Kubernetes logs and application logs are stored via the underlying container runtime on each node. The kubelet is responsible for rotating container logs and managing the logging directory structure. It passes this information to the container runtime (using CRI), and the runtime writes the container logs to the given location.

These logs are rotated based on two kubelet configuration settings:

- containerLogMaxSize (default 10Mi)

- containerLogMaxFiles (default 5)

These settings let you configure the maximum size of each log file and the maximum number of log files kept for each container, respectively. Crucially, the command kubectl logs only ever returns the contents of the latest file. The older, rotated files have to be read directly on the node.
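As a rough illustration, both settings live in the kubelet's configuration file. The sketch below only restates the defaults listed above. Whether you can edit this file depends on how your cluster is provisioned, and managed clusters may not expose it at all.

```yaml
# Sketch of a kubelet configuration file, showing only the log rotation fields.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
containerLogMaxSize: 10Mi   # rotate a container's log once it reaches this size
containerLogMaxFiles: 5     # keep at most this many log files per container
```

With these defaults, each container keeps roughly 50Mi of log output on the node (5 files of up to 10Mi each) before the oldest entries are discarded.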

Our requirement is to extend this functionality to make these logs persist for 30 days regardless of size.

FluentBit

FluentBit is a logging and metrics processor and forwarder. It can connect to multiple sources, lets you enrich and filter incoming data, and forwards it to a range of destinations. Its focus is on performance and efficiency, which suits situations where resources are limited.

Comparison to FluentD

My understanding is that FluentBit is a heavily optimised, next-generation counterpart to FluentD, while FluentD supports a wider range of connectors. A full comparison is provided in the FluentBit documentation.

Tail Plugin

The tail plugin allows you to monitor one or several text files. We define an input block that uses this plugin and points to the log files generated by Kubernetes or by applications:

    [INPUT]
        Name              tail
        Path              /var/log/containers/*.log
        Parser            docker
        Tag               kube.*
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On

S3 Plugin

The s3 plugin allows you to forward logs to an S3 compatible bucket. In our case we are using Linode Object Storage.

    [OUTPUT]
        Name                          s3
        Match                         kube.*
        bucket                        logs
        region                        *region*
        endpoint                      https://*region*.linodeobjects.com
        total_file_size               250M
        s3_key_format                 /$TAG[2]/$TAG[0]/%Y/%m/%d/%H/%M/%S/$UUID.gz
        s3_key_format_tag_delimiters  .-
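The output block above contains no credentials. FluentBit's s3 plugin follows the standard AWS credential chain, so one option is to pass a Linode Object Storage access key pair through the usual AWS environment variables. The fragment below is a sketch of that approach, not a complete manifest: the Secret name `linode-object-storage` and its keys `access-key` and `secret-key` are hypothetical and would need to match a Secret you create yourself.

```yaml
# Fragment for the container spec of the workload that runs FluentBit.
# The Secret name and key names are hypothetical placeholders.
env:
  - name: AWS_ACCESS_KEY_ID
    valueFrom:
      secretKeyRef:
        name: linode-object-storage
        key: access-key
  - name: AWS_SECRET_ACCESS_KEY
    valueFrom:
      secretKeyRef:
        name: linode-object-storage
        key: secret-key
```

Keeping the key pair in a Secret rather than in the FluentBit configuration means the configuration itself can be stored and shared without exposing credentials.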

Deployment

Kubernetes logs for an application are stored on its underlying node. This means we need to deploy FluentBit as a DaemonSet, which ensures that exactly one instance of FluentBit runs on each node.

Here is an example deployment manifest:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluent-bit
      namespace: logging
      labels:
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      selector:
        matchLabels:
          k8s-app: fluent-bit-logging
      template:
        metadata:
          labels:
            k8s-app: fluent-bit-logging
            version: v1
            kubernetes.io/cluster-service: "true"
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "2020"
            prometheus.io/path: /api/v1/metrics/prometheus
        spec:
          containers:
            - name: fluent-bit
              image: fluent/fluent-bit:3.0
              imagePullPolicy: Always
              ports:
                - containerPort: 2020
              volumeMounts:
                - name: varlog
                  mountPath: /var/log
                - name: varlibdockercontainers
                  mountPath: /var/lib/docker/containers
                  readOnly: true
                - name: fluent-bit-config
                  mountPath: /fluent-bit/etc/
          terminationGracePeriodSeconds: 10
          volumes:
            - name: varlog
              hostPath:
                path: /var/log
            - name: varlibdockercontainers
              hostPath:
                path: /var/lib/docker/containers
            - name: fluent-bit-config
              configMap:
                name: fluent-bit-config
          serviceAccountName: fluent-bit
          tolerations:
            - key: node-role.kubernetes.io/master
              operator: Exists
              effect: NoSchedule
            - operator: "Exists"
              effect: "NoExecute"
            - operator: "Exists"
              effect: "NoSchedule"
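The manifest references a `logging` namespace, a `fluent-bit` ServiceAccount and a `fluent-bit-config` ConfigMap that are not shown. A minimal sketch of those supporting objects might look like the following. The `[INPUT]` and `[OUTPUT]` blocks are the ones from earlier in this post. The `[SERVICE]` block and the contents of `parsers.conf` are assumptions on my part: the HTTP server on port 2020 is there to match the Prometheus annotations, and the `docker` parser is defined explicitly because mounting the ConfigMap over `/fluent-bit/etc/` hides the parser file shipped in the image. This also assumes the image reads `/fluent-bit/etc/fluent-bit.conf` by default.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: logging
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluent-bit
  namespace: logging
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: logging
data:
  fluent-bit.conf: |
    # [SERVICE] values are illustrative assumptions, not from the original setup.
    [SERVICE]
        Flush         5
        Log_Level     info
        Parsers_File  parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020

    [INPUT]
        Name              tail
        Path              /var/log/containers/*.log
        Parser            docker
        Tag               kube.*
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On

    [OUTPUT]
        Name                          s3
        Match                         kube.*
        bucket                        logs
        region                        *region*
        endpoint                      https://*region*.linodeobjects.com
        total_file_size               250M
        s3_key_format                 /$TAG[2]/$TAG[0]/%Y/%m/%d/%H/%M/%S/$UUID.gz
        s3_key_format_tag_delimiters  .-

  parsers.conf: |
    # JSON parser for Docker-style log lines (assumed definition).
    [PARSER]
        Name         docker
        Format       json
        Time_Key     time
        Time_Format  %Y-%m-%dT%H:%M:%S.%L
        Time_Keep    On
```

Because this configuration does not query the Kubernetes API, the ServiceAccount should not need any additional RBAC rules. That would change if you later added a filter that enriches records with pod metadata.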

Linode S3

Linode provides an S3-compatible Object Storage service which is cost-effective in this context. It also allows you to specify a lifecycle policy for the objects in a bucket, which satisfies our requirement to hold logs for 30 days.

Policies are defined in XML. For example, the following policy expires every object in the bucket after 30 days:

    <LifecycleConfiguration>
      <Rule>
        <ID>delete-all-objects</ID>
        <Filter>
          <Prefix></Prefix>
        </Filter>
        <Status>Enabled</Status>
        <Expiration>
          <Days>30</Days>
        </Expiration>
      </Rule>
    </LifecycleConfiguration>